Stereo Vision Cameras

Bring true 3D depth sensing to your AGVs by mimicking human binocular vision. Stereo cameras let robots tackle messy, changing spaces, pinpoint obstacles accurately, and work safely alongside humans, with no need to lean only on light-emitting sensors.

Core Concepts

Binocular Triangulation

Just like our eyes, stereo cams use two lenses spaced apart by a baseline. The system figures out depth by spotting how objects shift between the left and right views.

Disparity Mapping

The software whips up a disparity map where brighter pixels mean closer stuff (big shifts) and dimmer ones are farther away (small shifts).

Dense Point Clouds

Stereo vision spits out thick 3D point clouds packed with shape and size info, so AGVs can truly 'see' obstacles, not just detect them.

Passive Sensing

Unlike LiDAR or ToF sensors, stereo cams are passive—no light blasts means no interference from other bots' sensors in busy fleets.

Semantic Understanding

Since it's regular RGB video, you can layer on AI to spot things like 'person' versus 'pallet' for smarter choices.

Hardware Ruggedness

Today's industrial stereo cameras are all solid-state, no moving bits, so they shrug off warehouse shakes and bumps.

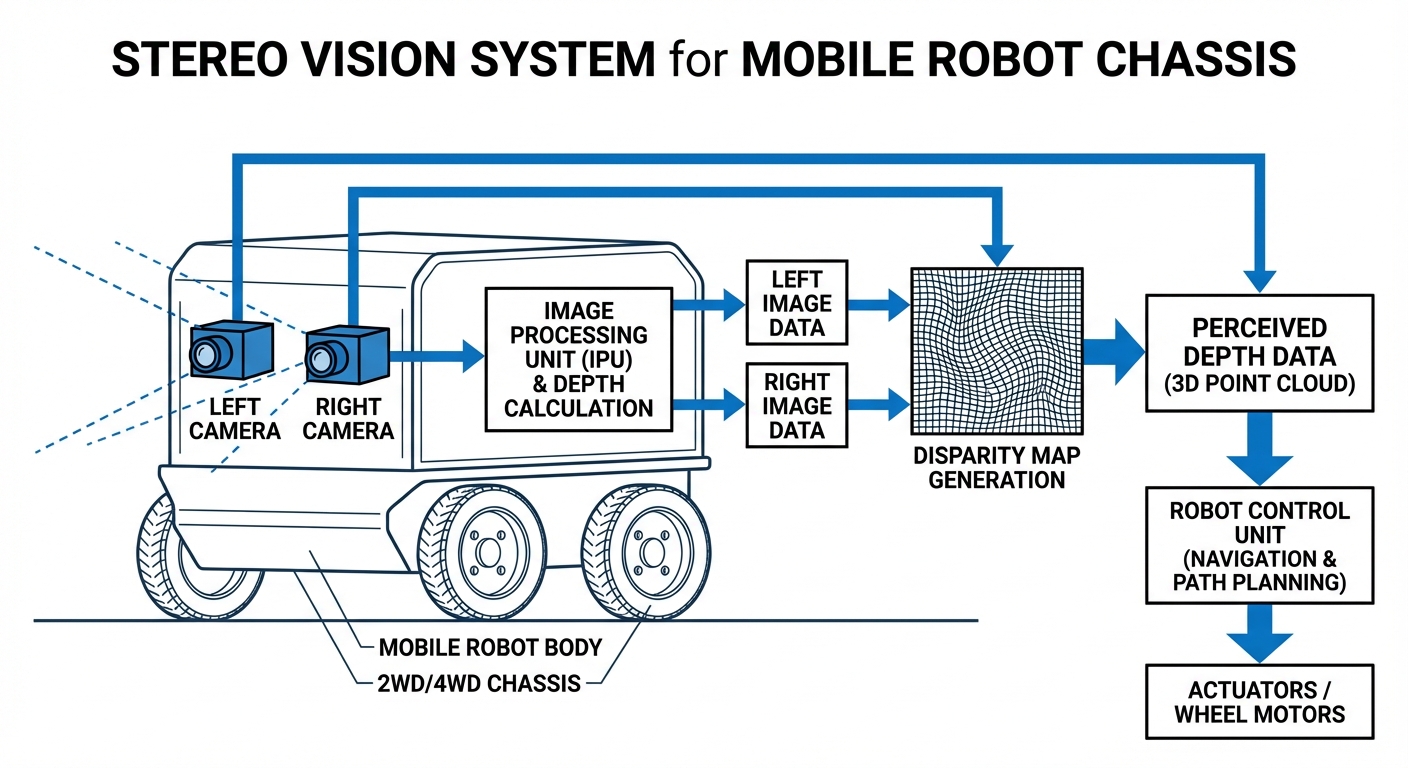

How It Works

The fundamental principle behind stereo vision is triangulation. The camera consists of two synchronized image sensors separated by a known horizontal distance, called the "baseline." When the camera captures a scene, it produces two slightly different images.

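Onboard processors or a host PC then do 'stereo matching.' The algorithm hunts for matching features in the left and right images, and the horizontal offset between them (the disparity) reveals depth. The relationship is inverse: a big disparity means a close object, a small one means a distant object.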
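The inverse link between disparity and depth can be sketched in a few lines. This is a minimal illustration for an ideal, rectified camera pair, where depth = focal length × baseline / disparity. The focal length (700 px) and baseline (12 cm) are made-up values for the example, not specs of any real camera.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Return depth in metres for a disparity in pixels, or None if invalid.

    Assumes an ideal rectified stereo pair: Z = f * B / d.
    """
    if disparity_px <= 0:
        return None  # no match found, or the point is effectively at infinity
    return focal_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
FOCAL_PX = 700.0
BASELINE_M = 0.12

for disparity in (84.0, 42.0, 8.4):
    depth = depth_from_disparity(disparity, FOCAL_PX, BASELINE_M)
    print(f"disparity {disparity:5.1f} px -> depth {depth:.2f} m")
```

Halving the disparity doubles the computed depth, which is why far-away objects, with their tiny shifts, are the hardest to range precisely.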

This builds a depth map with Z-info for every pixel. Paired with X and Y, your AGV gets full 3D coords everywhere for killer SLAM and dodging.
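Turning a depth map into 3D coordinates is usually done with the pinhole camera model. The sketch below assumes hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`, in pixels) and a depth map stored as nested lists of metres, with `None` or `0` marking pixels that have no valid depth. Real drivers typically hand you this as an array or a ready-made point cloud.

```python
def pixel_to_point(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with known depth into camera-frame X, Y, Z."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_map_to_points(depth_map, fx, fy, cx, cy):
    """Convert a depth map (rows of metres) into a list of (X, Y, Z) points."""
    points = []
    for v, row in enumerate(depth_map):
        for u, z in enumerate(row):
            if z is None or z <= 0:
                continue  # skip pixels without a valid depth
            points.append(pixel_to_point(u, v, z, fx, fy, cx, cy))
    return points


# Tiny 2x2 depth map with one invalid pixel; intrinsics are illustrative only.
tiny_depth = [[2.0, 2.0],
              [None, 2.5]]
cloud = depth_map_to_points(tiny_depth, fx=700.0, fy=700.0, cx=0.5, cy=0.5)
print(len(cloud), "valid points")
```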

Real-World Applications

Warehouse Obstacle Avoidance

AGVs in fulfillment centers use stereo to catch hazards LiDAR might skip, like raised forklift tines or dangling wires, cutting the risk of collisions.

Outdoor Yard Automation

Basic IR sensors flop in bright sun. Stereo shines outdoors, perfect for AMRs shuttling between buildings or yard work.

Pallet Pocket Detection

For autonomous forklifts, stereo delivers the sharp 3D view to nail pallet slots and fork alignment, even on wonky or beat-up loads.

Negative Obstacle Detection

A must for robot safety: spotting drop-offs like docks or stairs. Stereo scans the floor plane to flag 'negative obstacles' (holes) reliably.
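One simple way to picture the floor-plane check is a grid laid over the ground ahead of the robot. The sketch below is an assumption-heavy illustration, not a production safety function: it expects points already transformed into a robot frame with z pointing up and the floor at z = 0, and the cell size and drop threshold are hypothetical tuning values.

```python
import math


def find_negative_obstacles(points, cell_size_m=0.1, drop_threshold_m=0.05):
    """Return sorted grid cells whose highest point lies below the floor plane.

    points: iterable of (x, y, z) in a robot frame, z up, floor at z = 0.
    A cell is flagged only if every point in it is below -drop_threshold_m.
    """
    highest = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size_m), math.floor(y / cell_size_m))
        if cell not in highest or z > highest[cell]:
            highest[cell] = z
    return sorted(cell for cell, z in highest.items() if z < -drop_threshold_m)


# Flat floor close to the robot, then a 30 cm drop about a metre ahead.
sample_points = [
    (0.55, 0.02, 0.00), (0.55, 0.03, 0.01),
    (1.05, 0.02, -0.30), (1.06, 0.03, -0.28),
]
print(find_negative_obstacles(sample_points))
```

In practice a drop-off can also show up as cells with no depth data at all, so a real system would likely treat unexpectedly empty floor cells as suspect too.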

Frequently Asked Questions

How does Stereo Vision compare to LiDAR for AGVs?

LiDAR's great for range and accuracy but pricey with spotty vertical coverage (channel-dependent). Stereo? Cheaper, dense 3D across the view plus color for AI IDs—though it guzzles more compute.

Does Stereo Vision work in the dark?

Plain passive stereo needs ambient light for textures. But pro units pack IR pattern projectors to throw fake texture, working great in dim or pitch-black spots.

What is the typical range of a stereo camera?

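Range ties to the baseline (lens spacing). A short baseline (like 5 cm) rocks for close tasks (0.2-2 m); a wider one (20 cm+) hits 10-20 m for nav. Either way, depth error grows roughly with the square of the distance, so accuracy drops off quickly farther out.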
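The baseline trade-off follows from the standard first-order error estimate for stereo: depth error ≈ Z² × disparity error / (focal length × baseline). The sketch below uses an assumed 700 px focal length and an assumed quarter-pixel matching error, both hypothetical, to compare the two baselines mentioned above.

```python
def depth_error_m(distance_m, focal_px, baseline_m, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return (distance_m ** 2) * disparity_error_px / (focal_px * baseline_m)


FOCAL_PX = 700.0  # hypothetical focal length in pixels

for baseline_m in (0.05, 0.20):
    for distance_m in (1.0, 2.0, 10.0):
        err = depth_error_m(distance_m, FOCAL_PX, baseline_m)
        print(f"baseline {baseline_m * 100:4.0f} cm, "
              f"distance {distance_m:4.1f} m -> error ~{err * 100:.1f} cm")
```

Doubling the distance quadruples the estimated error, while a four-times-wider baseline cuts it to a quarter, which is why long-range navigation favors wider cameras.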

Does it work on white walls or textureless surfaces?

Pure stereo flops on blank walls—no matches. That's why industrials use 'Active Stereo' with dot projectors to fake texture for the software.

What is the computational load on the robot's CPU?

Crunching high-res stereo for depth maps is heavy lifting. But new cams have built-in FPGA or ASIC brains that preprocess it onboard, lightening the load on your main CPU.

How often do these cameras need calibration?

Factory-calibrated in tough metal housings, these cams stay aligned. Recal only if smacked hard or lenses wiggle loose.

Can stereo cameras detect glass or transparent objects?

Nah, usually not. Stereo needs visible features to match. Glass? Too see-through. Pair it with ultrasonics to cover that gap.

Is it compatible with ROS/ROS2?

Yep, most big industrial stereo makers offer solid ROS drivers. They pump out standard msgs like `sensor_msgs/Image`, `sensor_msgs/CameraInfo`, and `sensor_msgs/PointCloud2`.

How does sunlight affect performance?

Unlike structured light (old Kinect-style) that hates sun, stereo handles outdoors fine. But lens glare or wild contrasts (deep shade vs. blaze) can fuzz accuracy.

What is the latency typically involved?

With onboard crunching, latency's snappy—15-30ms based on res and FPS. Plenty quick for warehouse AGVs cruising at 2-3 m/s.
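To put those numbers in perspective, it helps to work out how far the robot moves before a fresh depth frame reaches the planner. This is plain arithmetic (distance = speed × latency) using the figures quoted above; it ignores braking distance and any delay in the rest of the control stack.

```python
def distance_during_latency_m(speed_m_s, latency_ms):
    """Distance travelled while one depth frame is still being processed."""
    return speed_m_s * latency_ms / 1000.0


for speed in (2.0, 3.0):
    for latency in (15.0, 30.0):
        travelled_cm = distance_during_latency_m(speed, latency) * 100
        print(f"{speed:.0f} m/s, {latency:.0f} ms latency -> {travelled_cm:.1f} cm travelled")
```

Even in the worst case here (3 m/s with 30 ms of latency), the robot covers under 10 cm before the depth data arrives.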

Can I use stereo vision for SLAM?

Totally. Visual SLAM (vSLAM) is a star use case. The system tracks stable 3D features over time to estimate motion and build a map on the fly.

What happens if one lens is blocked?

Block one lens and depth's gone, like closing one eye: you're down to mono. Systems typically flag the error so the robot can halt or switch to other sensors.
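One plausible way to catch a blocked lens is to watch how many pixels still get a valid stereo match, since a covered lens leaves almost nothing to match against. The sketch below is a hypothetical health check, not any vendor's actual fault logic: it assumes invalid matches are reported as `0` or `None`, and the 20% threshold is an arbitrary example value.

```python
def depth_is_healthy(disparity_map, min_valid_ratio=0.2):
    """Return True if enough pixels in the disparity map have a valid match.

    disparity_map: rows of disparities in pixels; 0 or None means "no match".
    """
    total = 0
    valid = 0
    for row in disparity_map:
        for d in row:
            total += 1
            if d is not None and d > 0:
                valid += 1
    if total == 0:
        return False  # no data at all is treated as a fault
    return valid / total >= min_valid_ratio


normal_frame = [[12.0, 8.5], [0, 10.0]]   # most pixels matched
blocked_frame = [[0, 0], [0, None]]        # matching has collapsed
print(depth_is_healthy(normal_frame), depth_is_healthy(blocked_frame))
```

A robot controller could use a check like this to decide when to stop or hand over to another sensor.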