2D and 3D Lidar

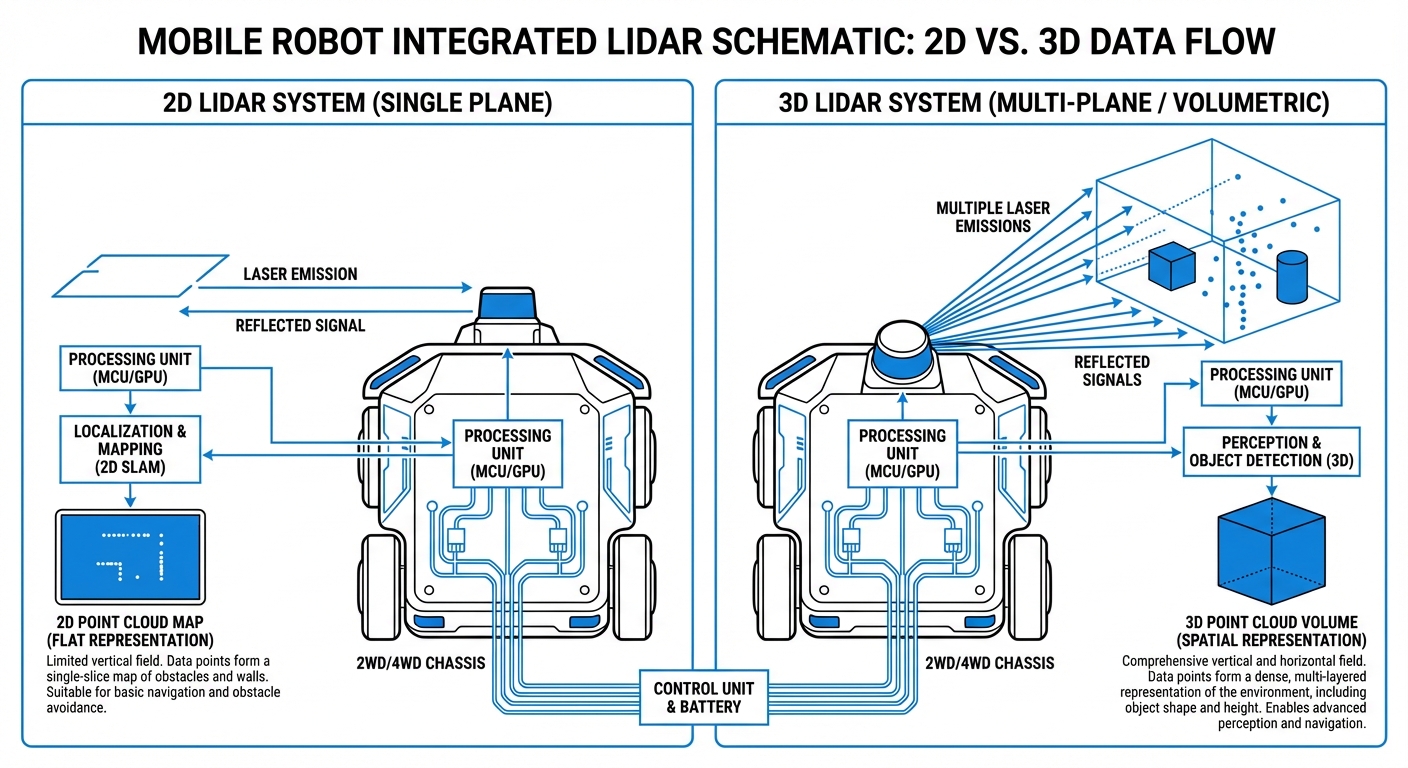

Light Detection and Ranging (Lidar) is the primary sensor for many autonomous mobile robots. Whether it is flat 2D scanning for safety and mapping or full 3D sensing for complex object detection, Lidar gives AGVs centimeter-level navigation accuracy in changing environments.

Core Concepts

Time of Flight (ToF)

It boils down to firing a laser pulse and measuring how long it takes to return. Because light travels at a known speed, that round-trip time gives the exact distance to the surface, and many such measurements form the foundation of spatial maps.

2D Planar Scanning

A single laser beam sweeps across a horizontal plane, capturing a 2D slice of the world. That makes it ideal for floor plans and for defining fixed safety stop zones around AGVs.
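To make the 2D idea concrete, here is a minimal Python sketch that turns one planar scan (a list of ranges at evenly spaced angles) into X/Y points in the sensor frame. The parameter names borrow the field names of the ROS `sensor_msgs/LaserScan` message; the 30 m default range limit is an assumption, not a property of any particular sensor.

```python
import math

def scan_to_points(ranges, angle_min, angle_increment, range_max=30.0):
    """Convert one planar scan from polar (range, angle) to (x, y) in meters.

    ranges: one distance per beam, ordered by increasing angle
    angle_min: angle of the first beam, in radians (0 = straight ahead)
    angle_increment: angular step between neighbouring beams, in radians
    range_max: assumed usable range; farther readings are dropped
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip beams with no valid return (inf/NaN, zero, or out of range).
        if not math.isfinite(r) or r <= 0.0 or r > range_max:
            continue
        theta = angle_min + i * angle_increment
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```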

3D Volumetric Cloud

Multiple laser channels stacked at different vertical angles produce a dense point cloud, helping robots spot overhangs, slopes, and specific objects.
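A 3D sensor adds an elevation angle per channel. The sketch below converts ranges to X/Y/Z points, assuming each channel has a fixed elevation angle and all channels share the same azimuth steps (a simplification; real sensors often stagger their firing times). Any channel layout you pass in is hypothetical.

```python
import math

def channel_point(range_m, azimuth_deg, elevation_deg):
    """Convert one 3D return (range, azimuth, elevation) to (x, y, z) in meters."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the x-y plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))

def sweep_to_cloud(ranges, elevations_deg, azimuths_deg):
    """Build a point cloud from a (channels x azimuth steps) grid of ranges.

    ranges[c][k] is the range measured by channel c at azimuth step k;
    non-positive or non-finite entries mean "no return" and are skipped.
    """
    cloud = []
    for c, elevation in enumerate(elevations_deg):
        for k, azimuth in enumerate(azimuths_deg):
            r = ranges[c][k]
            if math.isfinite(r) and r > 0.0:
                cloud.append(channel_point(r, azimuth, elevation))
    return cloud
```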

SLAM Navigation

Simultaneous Localization and Mapping (SLAM) lets the robot build a map of an unknown space while tracking its own position within it; on Lidar-equipped robots, the scan data drives both tasks.
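Lidar SLAM systems typically include a scan-matching step that estimates how the robot moved between two scans. One classic technique is Iterative Closest Point (ICP). The sketch below is a minimal 2D point-to-point ICP with brute-force nearest neighbours. It is only one building block (no loop closure, outlier rejection, or map management) and is not how any specific SLAM package is implemented.

```python
import math

def apply_transform(points, theta, tx, ty):
    """Rotate 2D points by theta (radians), then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def best_rigid_transform(src, dst):
    """Closed-form least-squares rotation + translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # optimal angle for the centered point sets
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def icp(scan, reference, iterations=20):
    """Estimate the transform (theta, tx, ty) that aligns scan onto reference."""
    theta_t, tx_t, ty_t = 0.0, 0.0, 0.0
    current = list(scan)
    for _ in range(iterations):
        # Pair each scan point with its nearest reference point (brute force).
        matched = [min(reference, key=lambda q: (q[0] - x) ** 2 + (q[1] - y) ** 2)
                   for x, y in current]
        theta, tx, ty = best_rigid_transform(current, matched)
        current = apply_transform(current, theta, tx, ty)
        # Compose this step with the transform accumulated so far.
        c, s = math.cos(theta), math.sin(theta)
        tx_t, ty_t = c * tx_t - s * ty_t + tx, s * tx_t + c * ty_t + ty
        theta_t += theta
    return theta_t, tx_t, ty_t
```

The returned pose change is what a SLAM back end would chain together to track the robot between scans.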

Reflectivity & Remission

Lidar sensors also measure the intensity of the returned light, which helps distinguish retro-reflective tape (used as navigation markers) from ordinary walls or dark obstacles.
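As an illustration of using intensity, the sketch below groups consecutive high-intensity returns into candidate reflector markers. It assumes intensities are normalized to 0..1 and that points arrive in beam order. The 0.8 threshold and two-hit minimum are hypothetical values that would need tuning per sensor, since intensity scales are device-specific.

```python
def find_reflectors(points, intensity_threshold=0.8, min_hits=2):
    """Return (x, y) centroids of runs of consecutive high-intensity returns.

    points: (x, y, intensity) tuples in beam order, intensity assumed in 0..1
    """
    reflectors = []
    run = []
    # A low-intensity sentinel at the end closes any run still open.
    for x, y, intensity in list(points) + [(0.0, 0.0, -1.0)]:
        if intensity >= intensity_threshold:
            run.append((x, y))
            continue
        if len(run) >= min_hits:  # ignore isolated bright points (likely noise)
            n = len(run)
            reflectors.append((sum(p[0] for p in run) / n, sum(p[1] for p in run) / n))
        run = []
    return reflectors
```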

Functional Safety (PL-d)

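Safety-rated 2D Lidars are a key part of meeting workplace safety requirements (such as OSHA rules in the US). Redundant internal checks make sure the robot stops if a person enters the protective field; the outer warning field typically only slows the robot or raises an alert.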

How It Works: From Laser to Logic

At the heart of a Lidar is an emitter-receiver pair. A laser diode fires short light pulses, usually in the infrared (905 nm or 1550 nm). Mechanical versions use a spinning mirror or rotating head to sweep the beam across the scene, often covering 360 degrees horizontally.

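When the light hits a surface, it scatters and part of it returns to the sensor. The distance follows from the speed of light c and the measured round-trip time t ($$ d = \frac{c \times t}{2} $$), where dividing by 2 accounts for the trip out and back. The sensor repeats this measurement up to hundreds of thousands of times per second.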
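Plugging numbers into the formula shows why timing precision matters: a 10 m target returns in roughly 67 ns, and each nanosecond of timing error shifts the distance by about 15 cm. A small sketch, using the vacuum speed of light (air slows light by about 0.03%, which this ignores):

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; air would lower it by about 0.03%

def tof_distance(round_trip_s):
    """Distance in meters from a measured round-trip time in seconds: d = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def round_trip_time(distance_m):
    """Round-trip time in seconds for a target at distance_m (inverse of the above)."""
    return 2.0 * distance_m / SPEED_OF_LIGHT

print(round_trip_time(10.0))  # about 6.67e-08 s, i.e. ~67 ns
print(tof_distance(1e-9))     # about 0.15 m per nanosecond of timing error
```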


The resulting collection of points, the "Point Cloud," feeds straight into the robot's navigation system, which turns it into a 2D occupancy grid or a 3D voxel map for path planning.
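A minimal sketch of how scan hits can be written into a 2D occupancy grid, assuming the robot's pose is already known (so this covers only the mapping half of SLAM). Cells along each beam get a "free" log-odds update and the cell at the hit gets an "occupied" update. The increment values and the dictionary-based sparse grid are hypothetical choices for illustration.

```python
import math

L_OCC, L_FREE = 0.85, -0.4  # hypothetical log-odds increments per observation

def bresenham(x0, y0, x1, y1):
    """Integer grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy

def update_grid(grid, resolution, sensor_xy, hits):
    """Apply one scan to a sparse grid {(i, j): log_odds}; the pose is assumed known."""
    si = math.floor(sensor_xy[0] / resolution)
    sj = math.floor(sensor_xy[1] / resolution)
    for hx, hy in hits:
        ray = bresenham(si, sj, math.floor(hx / resolution), math.floor(hy / resolution))
        for cell in ray[:-1]:  # the beam passed through these cells
            grid[cell] = grid.get(cell, 0.0) + L_FREE
        grid[ray[-1]] = grid.get(ray[-1], 0.0) + L_OCC  # the beam ended here
    return grid

def probability(log_odds):
    """Convert log-odds back to an occupancy probability in 0..1."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))
```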

Real-World Applications

Warehouse Intralogistics

2D safety Lidars are standard on autonomous forklifts. They create dynamic "protective fields" that extend farther at higher speeds, so the AGV can stop safely before hitting a person or pallet.
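The speed-dependent behavior can be illustrated with a small sketch. It is not safety logic: on real vehicles, field switching and intrusion detection run inside the certified scanner or safety controller. The field dimensions below are hypothetical, and fields are modeled as simple rectangles ahead of the vehicle (x forward, y left).

```python
# Hypothetical field sets: (max speed m/s, field length ahead m, field half-width m).
FIELD_SETS = [
    (0.3, 0.6, 0.6),
    (1.0, 1.5, 0.7),
    (2.0, 3.5, 0.8),
]

def select_field(speed):
    """Pick the first (slowest) speed band that covers the current speed."""
    for max_speed, length, half_width in FIELD_SETS:
        if speed <= max_speed:
            return length, half_width
    raise ValueError("speed exceeds the highest configured field set")

def field_violated(points, speed):
    """True if any scan point (x forward, y left, meters) lies inside the active field."""
    length, half_width = select_field(speed)
    return any(0.0 <= x <= length and abs(y) <= half_width for x, y in points)
```

The same obstacle 1.2 m ahead is ignored at a crawl but triggers a stop at 1.5 m/s, which is exactly the "longer field at higher speed" idea.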

Outdoor AMR Delivery

Last-mile delivery robots rely on 3D Lidar to navigate sidewalks, using height data to spot curbs and drop-offs and to tell a flat paper bag from a solid concrete block.
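One simple way to use height data for curbs and drop-offs is to build a ground-height profile along the robot's path and flag sudden jumps. The sketch below assumes points are already in a robot frame with the ground near z = 0; the 10 cm bins, 0.4 m corridor half-width, and 5 cm step threshold are hypothetical. Telling a bag from a block would additionally compare each object's height above this ground profile, and a real system would also treat missing ground returns ahead as a possible drop-off.

```python
def height_profile(points, bin_size=0.1, half_width=0.4):
    """Lowest z per forward-distance bin inside the robot's path corridor.

    points: (x, y, z) in a robot frame, x forward, y left, ground near z = 0
    """
    bins = {}
    for x, y, z in points:
        if x >= 0.0 and abs(y) <= half_width:
            k = int(x // bin_size)
            bins[k] = min(z, bins.get(k, z))
    return bins

def classify_profile(bins, bin_size=0.1, max_step=0.05):
    """List (distance_m, label) wherever ground height jumps by more than max_step."""
    events = []
    keys = sorted(bins)
    for prev, k in zip(keys, keys[1:]):
        dz = bins[k] - bins[prev]
        if dz > max_step:
            events.append((k * bin_size, "step up (curb)"))
        elif dz < -max_step:
            events.append((k * bin_size, "drop-off"))
    return events
```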

Cleanroom & Hospital

In sterile environments, high-precision Lidar guides robots through tight corridors without floor guide tape. 3D sensing is key for catching hanging wires or open drawers that a 2D scan plane might miss.
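The difference between a single scan plane and a full 3D cloud can be shown in a few lines. The sketch compares what a 2D scanner mounted at a hypothetical 0.2 m height would see against a 3D cloud filtered to a hypothetical robot height band (0.05 to 1.6 m) and flattened to X/Y, a common way to feed 3D data into a 2D costmap.

```python
def visible_to_2d(cloud, scan_height=0.2, tolerance=0.02):
    """Points a single horizontal scan plane at scan_height (m) would roughly see."""
    return [(x, y) for x, y, z in cloud if abs(z - scan_height) <= tolerance]

def project_height_band(cloud, z_min=0.05, z_max=1.6):
    """3D points that could touch the robot body, flattened to (x, y).

    z_min skips floor returns; z_max is the (hypothetical) robot height.
    """
    return [(x, y) for x, y, z in cloud if z_min <= z <= z_max]

cloud = [(1.0, 0.0, 0.2),   # table leg crossing the scan plane
         (1.5, 0.3, 1.1),   # hanging cable above the scan plane
         (2.0, 0.0, 0.0)]   # floor return
print(visible_to_2d(cloud))        # [(1.0, 0.0)]
print(project_height_band(cloud))  # [(1.0, 0.0), (1.5, 0.3)]
```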

Heavy Manufacturing

Large parts-hauling robots fuse data from 2-4 Lidars to build a full 360-degree safety bubble, since a bulky payload can block the view of any single sensor.
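Fusing several Lidars starts with expressing every point in a common robot frame. The sketch below assumes each sensor's mount pose (x, y, yaw relative to the robot center) is known from extrinsic calibration; the front/rear mounting positions in the test case are hypothetical.

```python
import math

def to_base_link(points, mount):
    """Transform (x, y) points from a sensor frame into the robot frame.

    mount: (x, y, yaw) of the sensor relative to the robot center, from calibration
    """
    mx, my, yaw = mount
    c, s = math.cos(yaw), math.sin(yaw)
    return [(mx + c * x - s * y, my + s * x + c * y) for x, y in points]

def merge_scans(scans, mounts):
    """Combine per-sensor point lists into one robot-frame list.

    scans: {sensor_name: [(x, y), ...]} in each sensor's own frame
    mounts: {sensor_name: (x, y, yaw)}
    """
    merged = []
    for name, points in scans.items():
        merged.extend(to_base_link(points, mounts[name]))
    return merged
```

For example, a rear sensor mounted 1.2 m behind the center and facing backward (yaw = pi) reports a point 1 m ahead of itself, which lands 2.2 m behind the robot center.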

Frequently Asked Questions

What is the primary difference between 2D and 3D Lidar for navigation?

2D Lidar sweeps a single horizontal plane, giving X and Y coordinates. It is great for indoor mapping and safety stops, but it misses anything above or below that plane (like tabletops). 3D Lidar adds the Z-axis for a full volumetric point cloud, letting robots understand object shapes and handle cluttered, unstructured spaces.

Can I use a navigation Lidar for safety functions?

Usually not. Safety Lidars are certified to strict standards (ISO 13849 PL-d, Category 3) and use redundant designs so that they fail safely. Standard navigation Lidars supply mapping data but lack the certified reliability needed to protect people in factories.

How does Lidar handle glass and mirrors?

Glass and mirrors are Lidar's weak spot. Light passes through glass, so glass walls and doors may not show up in the scan at all, while mirrors bounce the beam elsewhere and create "ghost" obstacles that are not really there. Fixes include sensor fusion (for example with ultrasonic sensors) or software that detects and filters these artifacts out of the map.
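As a rough illustration of the sensor-fusion fix for glass, the sketch below takes the more conservative (shorter) of the Lidar and ultrasonic ranges wherever an ultrasonic sensor covers a group of Lidar beams, since sound reflects off glass that light passes through. The beam-index mapping is a hypothetical simplification, and this does not remove mirror ghosts, which need separate filtering.

```python
def fuse_ranges(lidar_ranges, ultrasonic):
    """Conservatively combine Lidar beams with ultrasonic readings.

    lidar_ranges: per-beam ranges in meters (inf where no return, e.g. through glass)
    ultrasonic: list of (first_beam, last_beam, range_m) giving the beams each
                ultrasonic sensor's cone overlaps (a hypothetical mapping)
    """
    fused = list(lidar_ranges)
    for first, last, us_range in ultrasonic:
        for i in range(first, last + 1):
            fused[i] = min(fused[i], us_range)  # trust the nearer obstacle
    return fused

inf = float("inf")
print(fuse_ranges([5.0, inf, inf, 4.0], [(1, 2, 1.2)]))  # [5.0, 1.2, 1.2, 4.0]
```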

What is the advantage of Solid-State Lidar over Mechanical Lidar?

Mechanical Lidars physically spin, so their motors and bearings wear out, especially under the constant vibration of AGVs. Solid-state designs have no spinning assembly, which gives much better durability and service life, though usually at the cost of a narrower field of view (under 360°).

How does environmental dust or fog affect Lidar performance?

Dust and airborne particles can create false obstacles by reflecting part of the laser pulse early. Premium industrial Lidars use "multi-echo" processing to evaluate several returns per pulse, ignoring weak echoes from dust and keeping the strong return from the real wall behind it.
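The echo-selection rule described above can be sketched in a few lines: drop echoes below an intensity threshold, then keep the strongest remaining one. The 0.3 threshold and the normalized intensities are assumptions; other selection rules, such as always taking the last echo, are also possible.

```python
def select_echo(echoes, min_intensity=0.3):
    """Pick a range from the multiple echoes of one pulse.

    echoes: (range_m, intensity) pairs, intensity assumed normalized to 0..1
    Weak echoes (for example from dust) are dropped; the strongest remaining
    echo is treated as the real surface. Returns None if nothing qualifies.
    """
    valid = [e for e in echoes if e[1] >= min_intensity]
    if not valid:
        return None
    return max(valid, key=lambda e: e[1])[0]

print(select_echo([(1.2, 0.1), (6.8, 0.7)]))  # 6.8 (weak echo at 1.2 m ignored)
```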

What is the typical range required for warehouse AGVs?

For indoor warehouses, a range of 20-30 meters is usually enough for SLAM localization. High-speed forklifts, however, need safety fields sized to their stopping distance: at 2 m/s, depending on braking performance and load, that can mean a protective field several meters long, which the scanner must cover with certified reliability.
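A simplified stopping-distance estimate shows why field length grows quickly with speed: the braking term scales with the square of velocity. This sketch assumes constant deceleration and lumps measurement tolerance and other supplements into one term. Real protective-field sizing follows the applicable standards and the scanner manufacturer's procedure, and all parameter values here are hypothetical.

```python
def protective_field_length(speed, response_time, deceleration, tolerance):
    """Simplified protective-field length in meters (not a certified calculation).

    speed: vehicle speed, m/s
    response_time: scanner + controller + brake reaction time, s
    deceleration: assumed constant braking deceleration, m/s^2
    tolerance: lumped measurement and safety supplements, m
    """
    reaction_distance = speed * response_time             # travelled before braking
    braking_distance = speed ** 2 / (2.0 * deceleration)  # v^2 / (2a)
    return reaction_distance + braking_distance + tolerance

print(protective_field_length(2.0, 0.5, 0.5, 0.2))   # about 5.2 m
print(protective_field_length(2.0, 0.5, 0.25, 0.2))  # about 9.2 m (heavier load)
```

Halving the deceleration for a heavily loaded truck nearly doubles the field, which is why the certified range of the scanner has to be checked against the worst-case load.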

Why is angular resolution important?

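Angular resolution sets how densely points land on surfaces at a distance. Want to spot a skinny table leg at 10 m? At 1.0° the gap between neighboring points is about 17 cm, enough to miss the leg entirely; 0.25° narrows it to about 4.4 cm and 0.1° to about 1.7 cm, making a hit far more likely.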
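The numbers follow from simple geometry: adjacent beams at range r and angular step Δθ land about 2r·sin(Δθ/2) apart. The sketch below computes that spacing and the minimum number of beams guaranteed to hit an object of a given width (worst-case alignment, object facing the sensor). The 4 cm leg width in the example is an assumption.

```python
import math

def point_spacing(range_m, resolution_deg):
    """Approximate gap between neighbouring beam hits at a given range: 2 r sin(dθ/2)."""
    return 2.0 * range_m * math.sin(math.radians(resolution_deg) / 2.0)

def min_hits(object_width_m, range_m, resolution_deg):
    """Beams guaranteed to land on an object of this width, in the worst-case alignment."""
    return int(object_width_m // point_spacing(range_m, resolution_deg))

for res in (1.0, 0.25, 0.1):
    print(res, round(point_spacing(10.0, res), 4), min_hits(0.04, 10.0, res))
# 1.0 0.1745 0
# 0.25 0.0436 0
# 0.1 0.0175 2
```

For a 4 cm leg at 10 m, both 1.0° and 0.25° can miss it in the worst case, while 0.1° guarantees at least two hits.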

How do multiple robots prevent Lidar crosstalk/interference?

In crowded robot zones, one robot's laser can blind another's sensor. Manufacturers counter this with pulse encoding, slight frequency shifts, or software filters that reject returns with mismatched timing.
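One way to picture timing-based interference rejection: if a sensor adds a small random delay (jitter) before each pulse, its own echoes keep a constant time of flight across pulses, while pulses from another robot, which do not follow that jitter, appear at inconsistent times. The sketch below is a hypothetical illustration of that consistency check, not any manufacturer's scheme; the 1 ns tolerance and 200 ns jitter are assumed values.

```python
import random

def jittered_emit_times(n, period_s, max_jitter_s, rng):
    """Emission times with a random extra delay per pulse (hypothetical scheme)."""
    return [i * period_s + rng.uniform(0.0, max_jitter_s) for i in range(n)]

def consistent_range(emit_times, detect_times, tolerance_s=1e-9):
    """Mean round-trip time if every pulse agrees within tolerance, else None.

    Echoes of our own pulses follow our jitter, so their time of flight stays
    constant; another robot's pulses do not, so their apparent ToF scatters.
    """
    tofs = [d - e for e, d in zip(emit_times, detect_times)]
    if max(tofs) - min(tofs) > tolerance_s:
        return None  # timings disagree: treat as interference
    return sum(tofs) / len(tofs)

rng = random.Random(1)
emit = jittered_emit_times(5, 10e-6, 200e-9, rng)
own = [t + 66.7e-9 for t in emit]                # real target about 10 m away
foreign = [i * 10e-6 + 50e-9 for i in range(5)]  # unjittered pulses from another robot
print(consistent_range(emit, own))      # about 6.67e-08
print(consistent_range(emit, foreign))  # None
```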

Does 3D Lidar require more processing power than 2D?

Yes, far more. A 2D Lidar produces hundreds to a few thousand points per rotation; a 3D Lidar produces hundreds of thousands per second. Processing that stream (segmentation, ground removal, classification) demands powerful onboard compute such as GPUs or FPGAs, which drains the battery and raises the bill of materials (BOM).
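A common first step to cut the processing load is voxel-grid downsampling, which replaces all points inside each small cube with their centroid. The pure-Python sketch below shows the idea; libraries such as PCL (`pcl::VoxelGrid`) and Open3D provide optimized implementations. The 0.1 m voxel size is an assumed value.

```python
import math
from collections import defaultdict

def voxel_downsample(cloud, voxel_size=0.1):
    """Replace all (x, y, z) points sharing a cubic voxel with their centroid."""
    buckets = defaultdict(list)
    for x, y, z in cloud:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    result = []
    for pts in buckets.values():
        n = len(pts)
        result.append(tuple(sum(p[axis] for p in pts) / n for axis in range(3)))
    return result
```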

What is extrinsic vs. intrinsic calibration?

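Intrinsic calibration covers the sensor's own internal parameters, tuned at the factory for accuracy. Extrinsic calibration pins down the sensor's mounting position and orientation relative to the robot's center (base_link). If it is off by even a degree or a centimeter, maps get distorted and navigation fails.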
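To see why small extrinsic errors matter, the sketch below transforms the same sensor reading with a correct mount pose and with one that is off by 1 cm and 1°. The mount values are hypothetical; at 10 m the point moves by about 17 cm, enough to smear walls in a map.

```python
import math

def sensor_to_base(point, mount):
    """Express a 2D sensor-frame point in the robot frame given mount (x, y, yaw)."""
    mx, my, yaw = mount
    x, y = point
    c, s = math.cos(yaw), math.sin(yaw)
    return (mx + c * x - s * y, my + s * x + c * y)

def mount_error_at(point, true_mount, assumed_mount):
    """How far a reading lands from where it should when the mount is miscalibrated."""
    return math.dist(sensor_to_base(point, true_mount), sensor_to_base(point, assumed_mount))

# Hypothetical: true mount 0.30 m ahead of base_link; calibration is 1 cm and 1 degree off.
err = mount_error_at((10.0, 0.0), (0.30, 0.0, 0.0), (0.31, 0.0, math.radians(1.0)))
print(round(err, 3))  # 0.175
```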

Can cameras replace Lidar completely?

Visual SLAM with stereo cameras is a competitor, but Lidar shines with its reliability in total darkness and its direct, precise distance measurements that avoid the heavy computation of stereo matching. Top robots fuse both.

What is the cost difference between 2D and 3D implementations?

2D Lidars are a mature, inexpensive technology: basic navigation units can cost under $100, and safety-rated models around $1,000. 3D Lidars are pricier at $2,000-$10,000+ depending on channel count and range, so they are reserved for robots that truly need a 3D view.