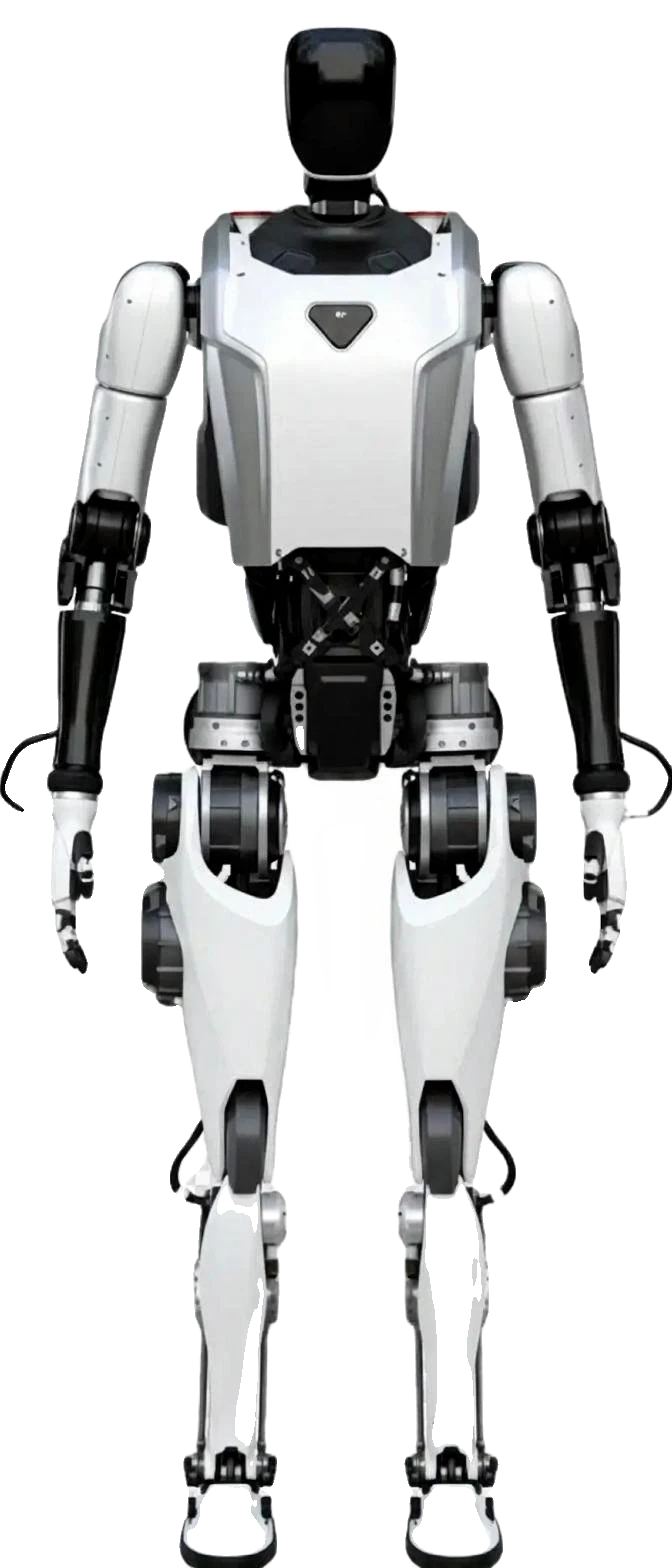

Qinglong V3.0

Qinglong V3.0 is a research humanoid robot by OpenLoong designed for locomotion, manipulation, and whole-body control studies.

Description

Key Features

Modular Bionic Design

Replaceable modules for the head, arms, hands, legs, and chassis allow upgrades and adaptation to different tasks. Biomimetic joint ranges exceed human limits on some axes.

High-Performance Computing

Heterogeneous compute rated at 400 TOPS, with dual CPUs and dual GPUs, for real-time AI processing, sensor fusion, and control over EtherCAT at 2 kHz.
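To make the 2 kHz control rate concrete, the short sketch below converts it into a per-cycle time budget. The constant and function names are hypothetical and are not taken from any OpenLoong software; only the 2 kHz figure comes from the description above.

```python
# Illustrative only: the names below are hypothetical, not an OpenLoong API.
# The 2 kHz rate is the one figure taken from the feature description.
CONTROL_RATE_HZ = 2_000


def cycle_period_us(rate_hz: float) -> float:
    """Return the length of one control cycle in microseconds."""
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return 1_000_000 / rate_hz


if __name__ == "__main__":
    # In a fixed-rate loop, the work done each cycle has to fit in this window.
    print(f"{cycle_period_us(CONTROL_RATE_HZ):.0f} us per cycle")  # 500 us per cycle
```

At 2 kHz each cycle lasts 500 µs.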

Dexterous Manipulation

Five-fingered hands with 12 or more DoF and tactile sensors. Each arm supports a payload of 4-6 kg, with precise grasping and handling of objects up to 5 kg.

Advanced Locomotion

Stable walking at 5 km/h, running at up to 2 m/s, traversal of 20° slopes, climbing of 15 cm stairs, and navigation of unstructured terrain using compliant ankle and hip control.
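The locomotion figures above mix km/h and m/s. The following minimal sketch converts between the two so the walking and running speeds can be read on one scale. The function names are hypothetical; the only inputs are the 5 km/h and 2 m/s values quoted above.

```python
# Illustrative unit conversion; function names are hypothetical.
KMH_PER_MS = 3.6  # 1 m/s = 3.6 km/h


def ms_to_kmh(speed_ms: float) -> float:
    """Convert metres per second to kilometres per hour."""
    return speed_ms * KMH_PER_MS


def kmh_to_ms(speed_kmh: float) -> float:
    """Convert kilometres per hour to metres per second."""
    return speed_kmh / KMH_PER_MS


if __name__ == "__main__":
    print(f"running: 2 m/s  = {ms_to_kmh(2.0):.1f} km/h")   # 7.2 km/h
    print(f"walking: 5 km/h = {kmh_to_ms(5.0):.2f} m/s")    # 1.39 m/s
```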

Open-Source Ecosystem

Full hardware schematics, dynamics control (MPC/WBC), simulation (GymLoong), and the software stack are available on GitHub for global collaboration.

Multimodal Perception

3D LiDAR, depth and RGB cameras, tactile arrays, and audio input for environmental understanding and low-latency action.

Specifications

| Specification | Value |
|---|---|
| Availability | Early production / limited release |
| Nationality | China |
| Website | https://www.openloong.net/ |
| Manufacturer | OpenLoong |
| Main market | Academic research, humanoid R&D |
| Verified | Not verified |
| Degrees of freedom, overall | 40 (also listed as 40-43) |
| Degrees of freedom, hands | 12 (also listed as 12-19) |
| Number of fingers | 5 per hand |
| Height [cm] | 165 (also listed as 165-185) |
| Weight [kg] | 75 (also listed as 75-85) |
| Shipping size | ~185 × 85 × 65 cm |
| Color | White |
| Manipulation performance | 3 |
| Navigation performance | 4 |
| Max speed [km/h] | 5 |
| Walking speed [km/h] | 4.5 |
| Strength | ~4–6 kg payload per arm (assumed) |
| Payload [kg] | 20 (total), 4-6 per arm |
| Runtime per charge [h] | 3 |
| Battery | ≥3 hours runtime, chest-mounted with fixing plates (kWh unspecified) |
| Safe with humans | Yes |
| CPU/GPU | Industrial CPU + AI accelerator / GPU (assumed) |
| Compute | Dual 2.2 GHz CPUs + dual 930 MHz GPUs, 400 TOPS |
| Camera resolution | RGB + depth cameras, ~1080p |
| Sensors | 3D LiDAR, RGB-D cameras (~1080p), tactile fingertip/palm arrays, torque sensors, encoders, microphone array, IMUs |
| Connectivity | Ethernet, USB, Wi‑Fi |
| Communication | EtherCAT bus (2 kHz), Ethernet, USB, Wi-Fi |
| Operating system | Linux-based / ROS |
| LLM integration | Possible via external system integration |
| Glass-to-action latency | 150–250 ms |
| Motor tech | Electric servo motors |
| Gear tech | Harmonic drive + planetary gears |
| Actuators | Electric servo motors with harmonic drives (upper), planetary/QDD (lower), max torque 396 Nm |
| Materials | Engineering metals (aluminum/steel alloys for frames/brackets), lightweight bionic structures |
| Thermal management | Not specified; modular design aids heat dissipation |

Curated Videos