Sunday Robotics

MEMO

Memo by Sunday is a wheeled home robot that uses ACT-1 and real-home training data to clear tables, load dishwashers and fold laundry autonomously.

Description

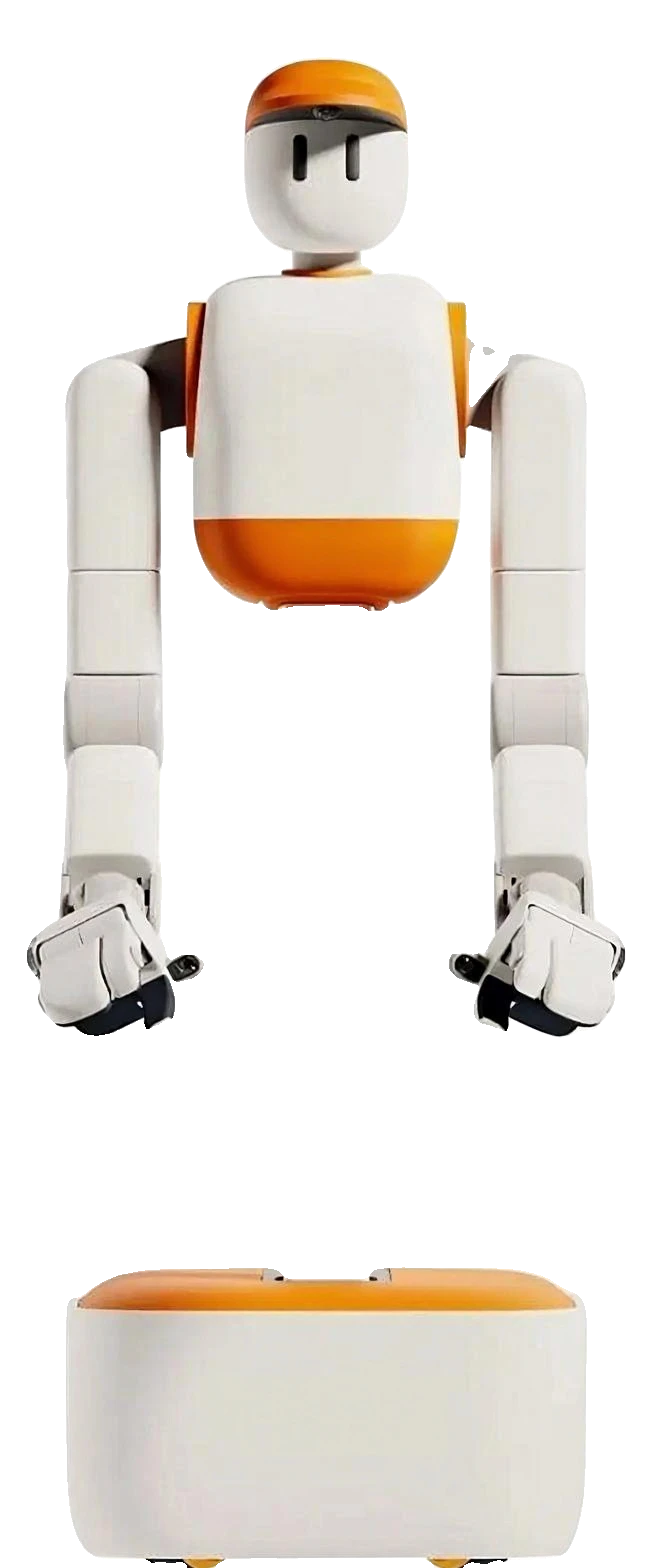

MEMO, developed by Sunday Robotics (operating under sunday.ai), is a wheeled humanoid home robot unveiled in November 2025 and designed for domestic chores in busy households. Unlike legged humanoids, which struggle with balance and energy efficiency, MEMO uses a wheeled base with passive stability, so it will not fall even if power is cut. Its height-adjustable torso lowers to ground level and lets the arms reach up to 2.1 meters vertically and 0.8 meters horizontally; the robot stands at 1.7 meters (170 cm) for most tasks. Weighing 77 kg (170 lbs), it prioritizes safety with compliant actuators that yield to human touch, a soft silicone outer shell free of sharp edges, and ingress protection rated IP66 on the lower arms and IP67 on the hands. Each of the two arms offers 7 degrees of freedom (DoF) and ends in a 4-DoF, four-finger pincer gripper that mirrors the company's Skill Capture Glove™, enabling dexterous manipulation of delicate items such as wine glasses and utensils.
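
The joint counts quoted above can be tallied in a few lines. This is a minimal sketch that counts only the arms and grippers; the description does not itemize torso or base joints, so those are deliberately left out, and the function name is illustrative.

```python
# DoF figures quoted in the description. Torso and base joints are not
# itemized there, so this tally covers arms and grippers only.
ARM_DOF = 7       # per arm
GRIPPER_DOF = 4   # per gripper
NUM_ARMS = 2


def upper_body_dof(arm_dof: int = ARM_DOF,
                   gripper_dof: int = GRIPPER_DOF,
                   arms: int = NUM_ARMS) -> int:
    """Total DoF contributed by the arms and their grippers."""
    return arms * (arm_dof + gripper_dof)


print(upper_body_dof())  # 22
```

The result matches the "22+" figure listed in the specifications, where the "+" covers the base and wheels.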

At its core is the ACT-1 foundation model, a robot AI trained without any teleoperation or robot-generated data. Instead, ACT-1 draws on over 10 million episodes of human movement captured with more than 2,000 Skill Capture Gloves worn by 'Memory Developers' in diverse real-world US homes, from studio apartments to family houses with pets and clutter. The glove, equipped with force sensors, cameras, and joint potentiometers, records 'memories' of chores, which 'Skill Transform' converts (at a stated 90% fidelity) into robot-equivalent trajectories. The end-to-end model combines long-horizon manipulation with map-conditioned navigation, generating actions that range from millimeter-precision grasps to meter-scale movement, conditioned on 3D environment maps for zero-shot generalization to unseen homes. ACT-1 shows emergent behaviors such as switching grasp types and handling novel objects, much as a 'Master Chef' would after observing many different kitchens.
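
Sunday has not published how Skill Transform works, so the following is only an illustration of the general idea of retargeting a glove recording to a gripper. It assumes a one-to-one joint mapping (plausible because the gripper mirrors the glove) and simply clamps each reading into an assumed joint range. Every name and number here (`GloveFrame`, `RobotFrame`, `GRIPPER_LIMITS`, `skill_transform_sketch`) is hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GloveFrame:
    """One timestep of a hypothetical glove recording."""
    t: float                   # seconds since episode start
    joint_angles: List[float]  # joint potentiometer readings, radians
    grip_force: float          # force sensor reading, newtons


@dataclass
class RobotFrame:
    """The corresponding hypothetical gripper command."""
    t: float
    gripper_joints: List[float]
    target_force: float


# Assumed joint limits for a 4-DoF gripper (radians); not published values.
GRIPPER_LIMITS = [(0.0, 1.5)] * 4


def skill_transform_sketch(frame: GloveFrame) -> RobotFrame:
    """Map glove joints one-to-one, clamping each into the assumed range."""
    if len(frame.joint_angles) != len(GRIPPER_LIMITS):
        raise ValueError("expected one glove reading per gripper joint")
    joints = [min(max(angle, lo), hi)
              for angle, (lo, hi) in zip(frame.joint_angles, GRIPPER_LIMITS)]
    return RobotFrame(t=frame.t, gripper_joints=joints,
                      target_force=frame.grip_force)


episode = [GloveFrame(0.0, [0.1, 0.2, 1.7, -0.1], 2.5)]
trajectory = [skill_transform_sketch(f) for f in episode]
print(trajectory[0].gripper_joints)  # [0.1, 0.2, 1.5, 0.0]
```

A real retargeting step would also have to handle camera data, timing, and arm kinematics; the sketch covers none of that.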

Deployment began in December 2024 with basic one-armed shoe arrangement. By October 2025 it had progressed to complex demos: autonomously clearing a dining table (33 unique interactions across 21 objects, including navigating 130+ ft to load the dishwasher and dump scraps), folding six pairs of socks picked from clutter (delicate pinches and stretching), and pulling espresso shots (bimanual tamping and high-torque insertion). The videos, shown at 5x speed, demonstrate room-scale autonomy without human intervention. Sunday's 48-hour iteration cycle of design, review, training (fine-tuning with data recipes and augmentation), and evaluation in new environments drives monthly expansions of the skill library. No user data is required for training; privacy is paramount, with consent obtained for beta feedback.
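
For a sense of scale, the 130 ft navigation figure can be combined with the 1 m/s maximum speed listed in the specifications. This is a rough lower bound under the assumption that the robot drives the whole distance at top speed, which the source does not claim; the function name is illustrative.

```python
FT_TO_M = 0.3048      # exact definition of the international foot
MAX_SPEED_M_S = 1.0   # maximum speed listed in the specifications
VIDEO_SPEEDUP = 5     # the demo videos are shown at 5x


def min_drive_time_s(distance_ft: float,
                     speed_m_s: float = MAX_SPEED_M_S) -> float:
    """Shortest possible driving time for a distance given in feet."""
    return distance_ft * FT_TO_M / speed_m_s


drive_s = min_drive_time_s(130)
print(round(drive_s, 1))                  # 39.6 seconds of real driving
print(round(drive_s / VIDEO_SPEEDUP, 1))  # 7.9 seconds of 5x video
```

Actual task time is longer, since the figure excludes the 33 manipulation steps and any slowing for obstacles.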

MEMO's architecture emphasizes pragmatic engineering: onboard compute (likely GPU-accelerated, with a Linux-based stack) for low-latency glass-to-action, collision avoidance for dynamic obstacles, and self-charging capability in beta. The current hand-built prototype costs about $20,000 per unit, and Sunday targets a reduction of at least 50% through scale for a 2027-2028 launch. An invite-only beta program in late 2026 will place a limited number of units with 'Founding Families' to refine hygiene, durability, and interaction. This data-centric approach is intended to break the scaling deadlock in robotics and to position MEMO as a home-ready helper that adapts to chaos without setup.
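
The cost target reduces to one line of arithmetic. The sketch below computes the ceiling on unit cost implied by a reduction of 50% or more; it says nothing about retail price, which the source does not state.

```python
PROTOTYPE_COST_USD = 20_000  # approximate hand-built unit cost
MIN_REDUCTION = 0.50         # targeted reduction of 50% or more


def cost_ceiling_usd(prototype_cost: float, min_reduction: float) -> float:
    """Upper bound on unit cost if the minimum reduction is achieved."""
    return prototype_cost * (1 - min_reduction)


print(cost_ceiling_usd(PROTOTYPE_COST_USD, MIN_REDUCTION))  # 10000.0
```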

Key Features

Zero Robot Data Training

Powered by the ACT-1 model, trained on 10M+ human glove-captured movements for intuitive, generalizable skills without teleoperation.

Long-Horizon Autonomy

Handles complex chores like table-to-dishwasher (130+ ft navigation, 33 interactions) in unseen homes via 3D maps.

Passive Safety & Stability

Wheeled base prevents falls, compliant limbs yield to touch, and the soft silicone shell (IP67 on the hands) is safe around kids and pets.

Dexterous Manipulation

7-DoF arms and 4-DoF hands grip delicate glassware, fold socks, and tamp espresso with force-sensitive precision.

Rapid Iteration

48-hour cycle adds skills monthly; adapts to novel objects/environments zero-shot.

Specifications

| Specification | Value |
|---|---|
| Availability | Prototype |
| Nationality | US |
| Website | https://www.sunday.ai |
| Degrees Of Freedom, Overall | 20 |
| Degrees Of Freedom, Hands | 3 |
| Height [cm] | 170 |
| Manipulation Performance | 2 |
| Navigation Performance | 2 |
| Max Speed [km/h] | 3.6 |
| Strength [kg] | Not specified |
| Weight [kg] | 77 |
| Runtime Per Charge [hours] | 4 |
| Safe With Humans | Yes |
| CPU/GPU | Likely onboard GPU-accelerated compute plus edge services for ACT-1 |
| Operating System | Not disclosed; internal robotics stack running ACT-1; likely Linux-based |
| LLM Integration | Uses ACT-1 robot foundation model for policy and planning; no public integration with general-purpose chat LLMs announced |
| Main Structural Material | Internal structure with rigid + elastic polymers, soft silicone outer shell |
| Number Of Fingers | 4 |
| Main Market | Domestic home chores |
| Verified | Not verified |
| Walking Speed [km/h] | 3.6 |
| Manufacturer | Sunday Robotics |
| Height | 170 cm (adjustable; vertical reach 210 cm, horizontal reach 80 cm) |
| Weight | 77 kg |
| DoF Overall | 22+ (arms: 2x7 DoF, hands: 2x4 DoF, plus base/wheels) |
| Max Speed | 1 m/s (3.6 km/h; software-limited to 50-80% human pace) |
| Battery | 4 hours runtime, 1 hour charge time; self-charging in beta |
| IP Rating | Hands: IP67, Lower arms: IP66 |
| Materials | Rigid + elastic polymers (internal), soft silicone shell (outer) |
| Sensors | Force sensors, cameras, joint potentiometers (hands match Skill Capture Glove); unspecified RGB/depth cameras for vision, IMUs presumed |
| Processors | Onboard GPU-accelerated compute (likely NVIDIA-based for AI inference); edge services for ACT-1 |
| Motors/Actuators | Compliant actuators (electric; unspecified models); high-torque for tool use |
| Grippers | Pincer-style, 4 fingers, widened grasp range for diverse objects |
| Mobility | Wheeled base with low CoG for passive stability |
| OS | Linux-based internal stack |
| AI Model | ACT-1 (end-to-end foundation model for manipulation + navigation) |
| Other | Collision avoidance (static/dynamic), 3D map-conditioned navigation |
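
Two of the listed figures can be cross-checked with simple arithmetic: the speed conversion and the share of time the robot could be working given its runtime and charge time. This is a sketch that assumes back-to-back full discharge and recharge cycles, which is an idealization and not a published duty cycle; the function names are illustrative.

```python
RUNTIME_H = 4.0      # runtime per charge, from the table
CHARGE_H = 1.0       # charge time, from the table
MAX_SPEED_M_S = 1.0  # maximum speed, from the table


def m_s_to_km_h(speed_m_s: float) -> float:
    """Convert meters per second to kilometers per hour."""
    return speed_m_s * 3.6


def availability(runtime_h: float, charge_h: float) -> float:
    """Fraction of time spent running in an ideal run/charge cycle."""
    return runtime_h / (runtime_h + charge_h)


print(m_s_to_km_h(MAX_SPEED_M_S))                         # 3.6
print(availability(RUNTIME_H, CHARGE_H))                  # 0.8
print(round(24 * availability(RUNTIME_H, CHARGE_H), 1))   # 19.2 hours per day
```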

Curated Videos