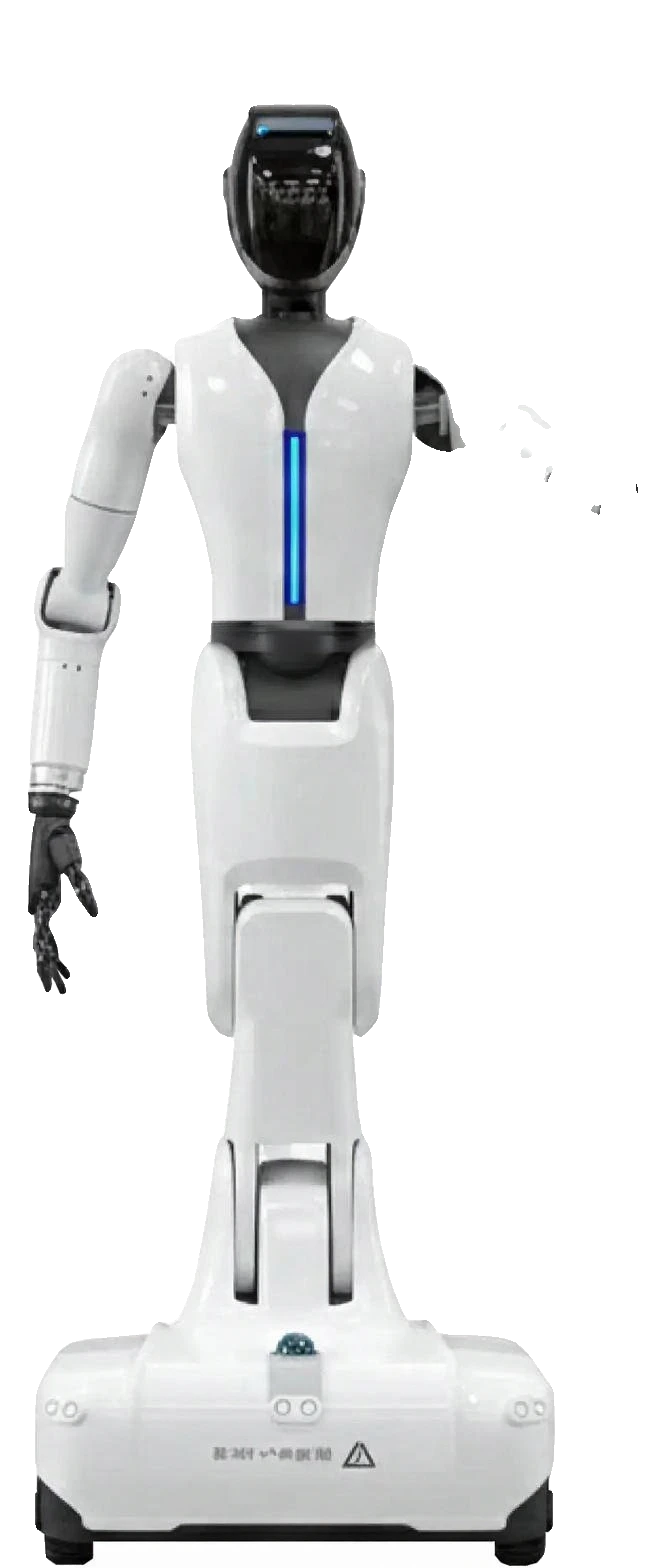

Maker H01

Maker H01 is a wheeled humanoid robot from GigaAI that pairs dual 7-DOF arms, multi-camera sensing, and an omnidirectional wheeled base to carry embodied AI into real-world tasks.

Description

Key Features

Wheeled Omnidirectional Mobility

An all-wheel-drive chassis with 360° LiDAR and ultrasonic sensors supports agile navigation, obstacle avoidance, and stable movement at up to 1.5 m/s (5.4 km/h) in dynamic environments.

Dual 7-DOF Bionic Arms

Each arm has 7 DOF, and the robot has 28 DOF in total (excluding end-effectors). The arms cover a 0–2 m vertical reach and carry up to 5 kg each (3 kg rated). Modular grippers or dexterous hands support complex tasks such as stacking and pouring.

Rich Multi-Modal Sensing

Nine cameras (5 RGB and 4 RGBD) on the head, chest, and hands, together with LiDAR, provide perception for safe human interaction and precise operations.

GigaBrain-0 VLA Control

GigaBrain-0 is an end-to-end vision-language-action (VLA) model that produces structured plans and actions from vision and language inputs. It is described as generalizing to long task sequences with high success rates.

GigaWorld-0 Data Engine

GigaWorld-0 is a world model that generates synthetic training data at scale, with the aim of improving training efficiency and narrowing the sim-to-real gap for faster model iteration.

Physical AGI Native Design

Modular hardware-software stack optimized for research, prototyping, and deployment in home, industrial, and service scenarios.

Specifications

| Specification | Value |
|---|---|
| Availability | Prototype |
| Nationality | China |
| Degrees of Freedom, Overall | 28 (excluding end-effectors) |
| Degrees of Freedom, Hands | 14 |
| Height [cm] | 160 |
| Manipulation Performance | 4 |
| Navigation Performance | 3 |
| Max Speed [km/h] | 8 |
| Strength [kg] | 5–10 per arm |
| Weight [kg] | 64 |
| Runtime per Charge [hours] | 4 |
| Safe With Humans | Yes |
| Ingress Protection | IP20 |
| Connectivity | Bluetooth, Ethernet, Wi-Fi |
| Operating System | Likely Linux + custom robot control stack |
| Latency, Glass to Action | 250–450 ms |
| Main Structural Material | Aluminum alloy frames + ABS composite shells |
| Number of Fingers | 5 per hand |
| Main Market | Home service, hospitality, reception, workplace assistance, light logistics, education |
| Verified | Not verified |
| Shipping Size | 170 cm × 70 cm × 50 cm |
| Color | White |
| Manufacturer | GigaAI |
| Height | 162 cm |
| Weight | 88 kg |
| DOF, Arms | 14 (7 DOF per bionic arm) |
| Payload per Arm | 5 kg max / 3 kg rated |
| Reach | 0–2 m vertical |
| Mobility | Omnidirectional wheeled chassis, max speed 1.5 m/s (5.4 km/h) |
| Sensors | 5× RGB cameras, 4× RGBD cameras (head/chest/hands), 360° LiDAR, ultrasonic sensors (specific models N/A) |
| Vision | Multi-modal RGB/RGBD fusion |
| Processors | N/A (inferred high-end GPU for VLA/world model inference) |
| Battery | 4 hours runtime (kWh/voltage N/A) |
| End Effectors | Multi-finger grippers / dexterous hands (modular) |
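
The listed top speed of 1.5 m/s (5.4 km/h) and the 250–450 ms glass-to-action latency can be combined into a rough estimate of how far the base travels before a reaction begins. The Python sketch below is only back-of-the-envelope arithmetic on those two figures. The function names are illustrative and not part of any GigaAI software, and the calculation assumes the robot holds its maximum speed for the whole latency window and ignores braking distance.

```python
# Figures copied from the specification table; everything else is illustrative.
MAX_SPEED_M_PER_S = 1.5
LATENCY_RANGE_MS = (250, 450)


def m_per_s_to_km_per_h(speed_m_per_s: float) -> float:
    """Convert metres per second to kilometres per hour."""
    return speed_m_per_s * 3.6


def distance_during_latency(speed_m_per_s: float, latency_ms: float) -> float:
    """Distance in metres covered at constant speed during the latency window.

    Assumption: speed stays constant and braking distance is not included.
    """
    return speed_m_per_s * latency_ms / 1000.0


print(f"Top speed: {m_per_s_to_km_per_h(MAX_SPEED_M_PER_S):.1f} km/h")
for latency_ms in LATENCY_RANGE_MS:
    travelled_m = distance_during_latency(MAX_SPEED_M_PER_S, latency_ms)
    print(f"{latency_ms} ms latency -> {travelled_m:.3f} m travelled")
```

Under these assumptions the base covers roughly 0.38 m to 0.68 m at top speed before a vision-triggered action starts, which is a useful lower bound when choosing clearance margins around people.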
