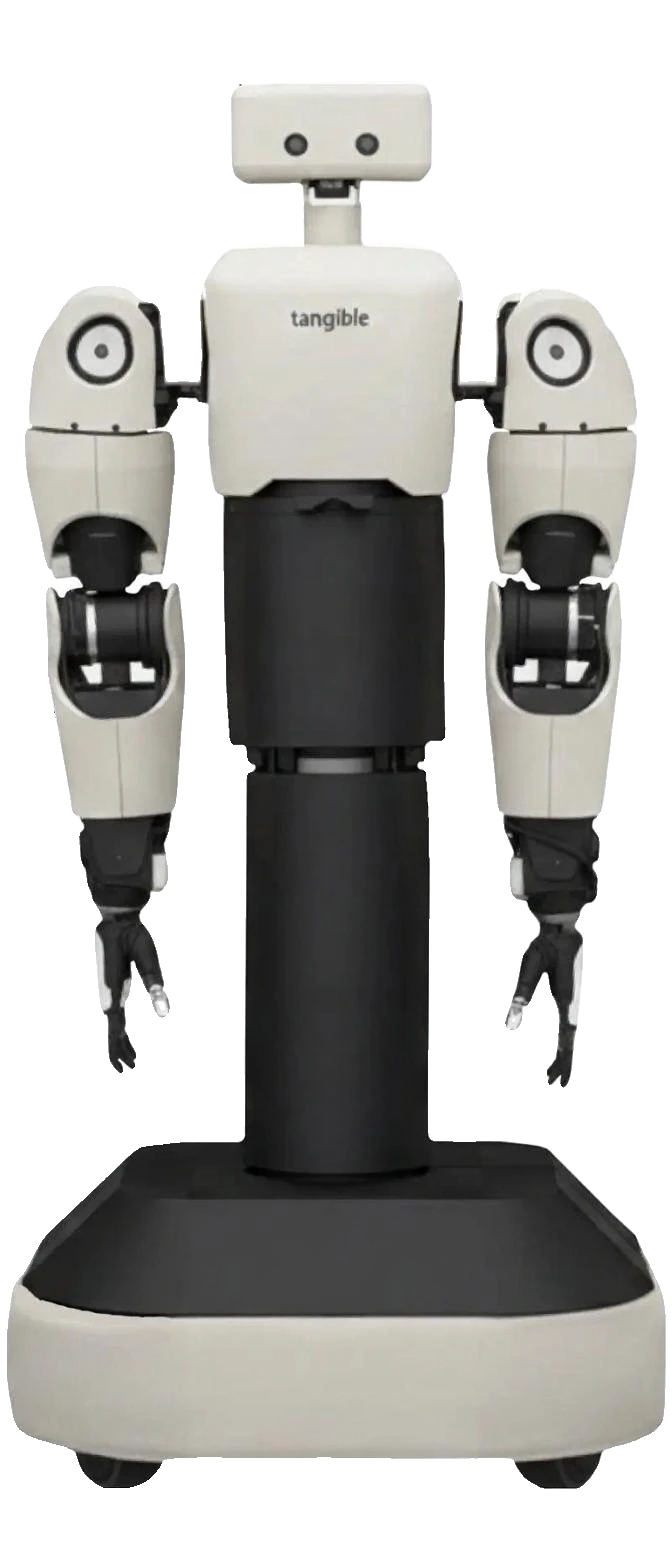

Eggie

Eggie is a friendly mobile humanoid robot designed for interaction, education, and light service tasks. It combines expressive arms with smooth wheeled mobility, offering an approachable and flexible platform for AI-driven communication.

Description

Key Features

Dexterity and Anthropomorphic Hands

Five-fingered bionic hands handle everyday objects such as mugs and cloths with precise manipulation; each arm supports a payload of 1–2 kg.

Compliance and Whole-Body Control

Designed for contact-rich interaction in cluttered spaces. Compliant control lets the robot navigate and manipulate objects safely in unpredictable home environments.

Wheeled Mobility

Smooth indoor navigation at up to 3 km/h. The wheeled base avoids the complexity of bipedal walking while the upper body keeps humanoid expressiveness for natural interaction.

Advanced AI Integration

Combines imitation learning, reinforcement learning (RL), vision-language-action (VLA) models, and multimodal training on real-world data for low-latency (<200 ms end-to-end) autonomous behaviors.

Real-World Data Learning

Trained on thousands of interactions from hundreds of diverse environments, enabling robust performance beyond simulations.

Specifications

| Specification | Value |
|---|---|
| Availability | Prototype |
| Nationality | US |
| Website | https://tangiblerobots.ai/ |
| Degrees of Freedom, Overall | 20 |
| Degrees of Freedom, Hands | 3 |
| Height [cm] | 160 |
| Manipulation Performance | 2 |
| Navigation Performance | 3 |
| Max Speed [km/h] | 3 |
| Strength [kg] | 1–2 per arm |
| Weight [kg] | 45 |
| Runtime per Charge [hours] | 7 |
| Safe with Humans | Yes |
| CPU/GPU | Likely NVIDIA Jetson or similar embedded AI board |
| Ingress Protection | IP20 |
| Camera Resolution | Dual 1080p depth + RGB |
| Connectivity | Bluetooth, Wi-Fi |
| Operating System | Linux-based ROS environment |
| LLM Integration | Likely via cloud API (OpenAI, Alibaba Qwen, Baidu, etc.) |
| Latency, Glass to Action | <200 ms (end-to-end) |
| Motor Tech | Electric servo motors |
| Gear Tech | Plastic/composite gear trains |
| Main Structural Material | Aluminum frame + composite panels |
| Number of Fingers | 5 per hand |
| Main Market | Exhibitions, home assistance, research & education |
| Verified | Not verified |
| Color | White |
| Manufacturer | Tangible Robots |
| Price [USD] | 32000 |
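
As a rough illustration of how the listed figures combine, the Python sketch below records a few of them and derives an upper bound on travel distance per charge. The `RobotSpec` class and its field names are hypothetical (not a vendor API), and the calculation assumes continuous driving at maximum speed for the full runtime, which the listing does not claim; real-world range would likely be lower.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotSpec:
    """Hypothetical container for a few listed figures; not a vendor API."""

    max_speed_kmh: float
    runtime_hours: float
    weight_kg: float

    def max_range_km(self) -> float:
        # Upper bound only: assumes the robot drives at top speed
        # for the entire runtime, with no idle or manipulation time.
        return self.max_speed_kmh * self.runtime_hours


# Values taken from the specification table (unverified prototype data).
eggie = RobotSpec(max_speed_kmh=3.0, runtime_hours=7.0, weight_kg=45.0)

print(f"Upper-bound range per charge: {eggie.max_range_km()} km")
```

Under these assumptions the bound works out to 3 km/h × 7 h = 21 km per charge.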
