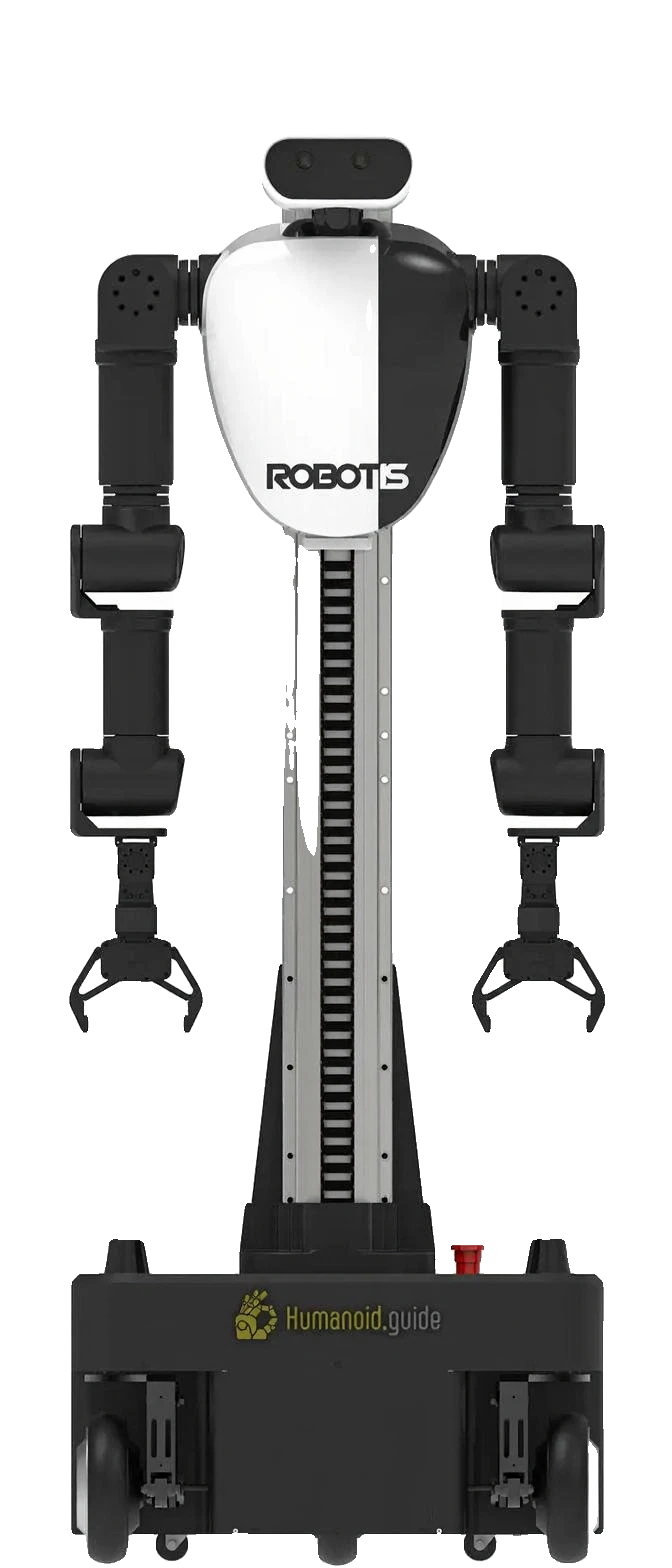

AI Worker

A semi-humanoid robot that learns from human demonstrations to automate complex industrial tasks, boosting productivity and easing labor shortages.

Description

Key Features

Physical AI Imitation Learning

Learns complex tasks from human teleoperation demonstrations through an end-to-end pipeline covering data collection, visualization, training, and inference with the GR00T N1.5 vision-language-action (VLA) model.
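To make the demonstration-to-policy idea concrete, here is a toy sketch of behavior cloning, the simplest form of imitation learning. Teleoperated episodes are stored as (observation, action) pairs, and a policy is fit to reproduce the operator's actions. This is not GR00T N1.5 or the ROBOTIS pipeline. The `Episode` class, the `fit_linear_policy` function, and the 1-D linear policy are all hypothetical stand-ins for a large VLA model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Episode:
    """One teleoperated demonstration (hypothetical format): paired observations and operator actions."""
    observations: List[float] = field(default_factory=list)
    actions: List[float] = field(default_factory=list)

    def record(self, obs: float, act: float) -> None:
        # Data collection: log what the robot observed and what the operator commanded.
        self.observations.append(obs)
        self.actions.append(act)


def fit_linear_policy(episodes: List[Episode]) -> Callable[[float], float]:
    """Training: ordinary least-squares fit of a 1-D policy a = w * o + b over all episodes."""
    xs = [o for ep in episodes for o in ep.observations]
    ys = [a for ep in episodes for a in ep.actions]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    w = sxy / sxx
    b = mean_y - w * mean_x
    # Inference: the returned policy maps a new observation to an action.
    return lambda obs: w * obs + b


if __name__ == "__main__":
    demo = Episode()
    for obs in [0.0, 1.0, 2.0, 3.0]:
        demo.record(obs, 2.0 * obs + 1.0)  # the operator behaves as a = 2o + 1
    policy = fit_linear_policy([demo])
    print(policy(10.0))  # 21.0
```

A real VLA policy replaces the scalar observation with camera images, proprioception, and a language instruction, and replaces the linear fit with a large neural network. The collect, train, and infer structure stays the same.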

High-DOF Bimanual Manipulation

Dual 7-DOF arms with RH-P12-RN grippers (5 kg payload, adaptive passive joints) enable precise manipulation for delicate tasks such as wiring and assembly.

Swerve Drive Mobility

Omnidirectional base with 6 actuated DOF and a top speed of 1.5 m/s. Each wheel is independently steered and driven, which provides strong traction, precise positioning, and efficient movement in tight industrial spaces.
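Swerve drive gets its maneuverability from a simple inverse-kinematics rule. Each module's wheel velocity equals the base velocity plus the rotational term ω × r at that module's position, and the module steers to point along that vector. The sketch below assumes three modules, inferred from the 6 actuated DOF at one steering axis and one drive axis each, placed at hypothetical positions. It also treats the 1.5 m/s base top speed as an assumed per-wheel limit. `swerve_inverse_kinematics` and the module layout are illustrative and do not represent the ROBOTIS controller.

```python
import math
from typing import List, Tuple


def swerve_inverse_kinematics(
    vx: float,
    vy: float,
    omega: float,
    module_positions: List[Tuple[float, float]],
    max_wheel_speed: float = 1.5,
) -> List[Tuple[float, float]]:
    """Return (steer_angle_rad, wheel_speed_m_s) for each swerve module.

    vx, vy: desired base velocity in the robot frame (m/s).
    omega: desired yaw rate (rad/s), counterclockwise positive.
    module_positions: (x, y) of each module relative to the base center (m).
    max_wheel_speed: assumed per-wheel limit (m/s); if exceeded, all speeds are scaled down together.
    """
    commands = []
    for x, y in module_positions:
        # Rigid-body velocity at the module: v + omega x r, with r = (x, y).
        wx = vx - omega * y
        wy = vy + omega * x
        commands.append((math.atan2(wy, wx), math.hypot(wx, wy)))

    # Desaturate: scale every wheel by the same factor so the commanded motion direction is preserved.
    peak = max((speed for _, speed in commands), default=0.0)
    if peak > max_wheel_speed:
        scale = max_wheel_speed / peak
        commands = [(angle, speed * scale) for angle, speed in commands]
    return commands


# Hypothetical layout: three modules spaced 120 degrees apart, 0.25 m from the base center.
MODULES = [
    (0.25 * math.cos(math.radians(a)), 0.25 * math.sin(math.radians(a)))
    for a in (0, 120, 240)
]

if __name__ == "__main__":
    # Diagonal translation while turning slowly.
    for angle, speed in swerve_inverse_kinematics(0.5, 0.5, 0.3, MODULES):
        print(f"steer {math.degrees(angle):7.2f} deg, speed {speed:.3f} m/s")
```

A production controller would also hold the previous steering angle when a module's speed is near zero. It would also reverse the wheel instead of steering more than 90°. Both refinements are omitted here for brevity.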

Real-Time Perception

A multi-camera suite (ZED Mini on the head, RealSense D405 on each hand), two LiDARs, and IMUs support SLAM, obstacle avoidance, and close-range grasping.
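For close-range grasping, a depth pixel from a hand camera is typically converted into a 3D point with the pinhole camera model. The sketch below approximates the D405 focal lengths from the nominal resolution and field of view listed in the Specifications table (1280×720, 87°×58°). It also assumes the principal point sits at the image center. Real code should use the calibrated intrinsics reported by the camera driver instead. The function and constant names are hypothetical.

```python
import math
from typing import Tuple

# Nominal D405 figures from the spec table; these are approximations, not calibration data.
D405_WIDTH, D405_HEIGHT = 1280, 720
D405_HFOV_DEG, D405_VFOV_DEG = 87.0, 58.0
D405_MIN_DEPTH_M, D405_MAX_DEPTH_M = 0.07, 0.50


def focal_length_px(size_px: int, fov_deg: float) -> float:
    """Pinhole focal length in pixels from image size and field of view along one axis."""
    return (size_px / 2) / math.tan(math.radians(fov_deg) / 2)


def deproject(u: float, v: float, depth_m: float) -> Tuple[float, float, float]:
    """Map pixel (u, v) with depth Z to a camera-frame point (X, Y, Z) in meters."""
    if not D405_MIN_DEPTH_M <= depth_m <= D405_MAX_DEPTH_M:
        raise ValueError(f"depth {depth_m} m is outside the D405's 7-50 cm working range")
    fx = focal_length_px(D405_WIDTH, D405_HFOV_DEG)
    fy = focal_length_px(D405_HEIGHT, D405_VFOV_DEG)
    cx, cy = D405_WIDTH / 2, D405_HEIGHT / 2  # assumed principal point: image center
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


if __name__ == "__main__":
    # A pixel right of and above center, 25 cm away.
    print(deproject(800, 300, 0.25))
```

The depth-range check reflects why the hand cameras handle grasping. Targets closer than about 10 cm fall inside the D405's 7-50 cm working range but below the ZED Mini's 0.1 m minimum depth.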

Open-Source Ecosystem

Full access to ROS 2 code, simulations, datasets, and hardware designs supports rapid R&D and customization.

ROS 2 Integration

ros2_control runs at 100 Hz, providing modular, real-time joint and velocity control with safety arbitration.
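At 100 Hz, each control cycle has a 10 ms budget. The sketch below shows one common way to arbitrate between command sources within a cycle. An emergency stop overrides everything; otherwise the highest-priority source wins, and its joint velocities are clamped to a limit. This is a plain-Python illustration, not the ros2_control API. `Command`, `arbitrate`, the priority scheme, and the limit values are assumptions.

```python
from dataclasses import dataclass
from typing import List

CONTROL_RATE_HZ = 100
CYCLE_BUDGET_S = 1.0 / CONTROL_RATE_HZ  # 0.01 s (10 ms) per control cycle


@dataclass
class Command:
    """A velocity command from one source (hypothetical, e.g. teleoperation or a learned policy)."""
    source: str
    priority: int  # higher value wins arbitration
    joint_velocities: List[float]  # rad/s, one entry per joint


def arbitrate(
    commands: List[Command],
    num_joints: int,
    velocity_limit: float,
    estop: bool = False,
) -> List[float]:
    """Pick the command to send this cycle and enforce a symmetric velocity limit."""
    if estop or not commands:
        # Fail safe: with an e-stop or no fresh command, hold every joint at zero velocity.
        return [0.0] * num_joints
    winner = max(commands, key=lambda c: c.priority)
    if len(winner.joint_velocities) != num_joints:
        raise ValueError("command size does not match joint count")
    return [max(-velocity_limit, min(velocity_limit, v)) for v in winner.joint_velocities]


if __name__ == "__main__":
    teleop = Command("teleop", priority=2, joint_velocities=[0.5, -3.0, 0.1])
    policy = Command("policy", priority=1, joint_velocities=[0.2, 0.2, 0.2])
    print(arbitrate([teleop, policy], num_joints=3, velocity_limit=1.0))  # [0.5, -1.0, 0.1]
```

Falling back to zero velocity when no command arrives is a deliberately conservative choice. A stale command from a crashed source is never replayed.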

Specifications

| Specification | Value |
|---|---|
| Manufacturer | Robotis |
| Nationality | South Korea |
| Website | https://ai.robotis.com/ |
| Availability | In production |
| Main market | Logistics, manufacturing, R&D |
| Verified | Not verified |
| Height | 162.3 cm |
| Weight | 90 kg |
| DOF, total | 25 |
| DOF, arms | 7 × 2 |
| DOF, hands | 7 |
| DOF, grippers | 1 × 2 |
| DOF, head | 2 |
| DOF, lift | 1 |
| DOF, mobile base | 6 |
| Number of fingers | 4 |
| Arm reach | 641 mm |
| Payload, nominal | 3 kg single arm / 6 kg dual arm |
| Payload, peak | 5 kg single arm / 10 kg dual arm |
| Gripper payload | 5 kg |
| Strength | 6 kg |
| Max speed | 1.5 m/s (5.4 km/h) |
| Manipulation performance | 2 |
| Navigation performance | 2 |
| Safe with humans | Yes |
| Runtime per charge | 4 h |
| Battery | 25 V, 80 Ah (2040 Wh) LiPo |
| Operating temperature | 0-40 °C |
| Processors | NVIDIA Jetson AGX Orin 32GB (JetPack 6.2) |
| Operating system | Linux; ROS 2 Jazzy (in Docker) |
| Control rate | 100 Hz (ros2_control) |
| Policy model integration | ACT, GR00T, PI |
| Connectivity | Ethernet, Wi-Fi 6; RS-485 @ 4 Mbps |
| Motors | DYNAMIXEL-Y/P/X series (YM080-230, YM070-210, PH42-020, XH540-V150, XH430-V210); RH-P12-RN grippers |
| Gear tech | DYNAMIXEL DRIVE (DYD) |
| Sensors | Head camera: Stereolabs ZED Mini (2208×1242, 102°×57° FOV, 0.1-9 m depth, 6-DoF IMU); hand cameras: 2× Intel RealSense D405 (1280×720, 87°×58° FOV, 7-50 cm depth); LiDAR: 2× (models unspecified); IMU: integrated in ZED Mini and actuators |
| Main structural material | Aluminum, plastic |
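Several table entries can be cross-checked with simple arithmetic. The per-axis DOF breakdown sums to the stated total, and the two speed figures agree. The battery capacity and runtime imply an average power draw of 510 W, which is an inference from the listed numbers, not a published specification. Likewise, the 2040 Wh capacity corresponds to a nominal pack voltage of 25.5 V, which suggests the listed 25 V is rounded (25 V × 80 Ah would give 2000 Wh).

$$
\begin{aligned}
\text{DOF}_{\text{total}} &= \underbrace{7 \times 2}_{\text{arms}} + \underbrace{1 \times 2}_{\text{grippers}} + \underbrace{2}_{\text{head}} + \underbrace{1}_{\text{lift}} + \underbrace{6}_{\text{mobile}} = 25 \\
v_{\max} &= 1.5\ \text{m/s} \times 3.6\ \tfrac{\text{km/h}}{\text{m/s}} = 5.4\ \text{km/h} \\
\bar{P} &\approx \frac{E_{\text{battery}}}{t_{\text{runtime}}} = \frac{2040\ \text{Wh}}{4\ \text{h}} = 510\ \text{W} \\
V_{\text{nominal}} &= \frac{2040\ \text{Wh}}{80\ \text{Ah}} = 25.5\ \text{V}
\end{aligned}
$$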
